"2/3 of leading global financial service firms have implemented the use of chatbots for their apps since the onset of the COVID-19 pandemic." (Forrester, 2020)

Chatbots are changing customer service as we know it. On the company's side, chatbots can handle up to 80% of routine questions, helping businesses cut customer service costs (IBM, 2017). The chatbot market is also expected to grow to a whopping $102.29 billion by 2026 (Research and Markets, 2021).

What matters is that the chatbot reaches your users' or clients' hands already tested and trained. That way, it won't damage the company's reputation, add even more workload to "human" customer service, or attract negative reviews of your service.

But how do you test a chatbot? Let's look at the main strategies and tools that help you make a good impression on your customers and users.

Test the chatbot: what to keep in mind before the release

Let's start with some aspects to take into consideration before releasing a chatbot:

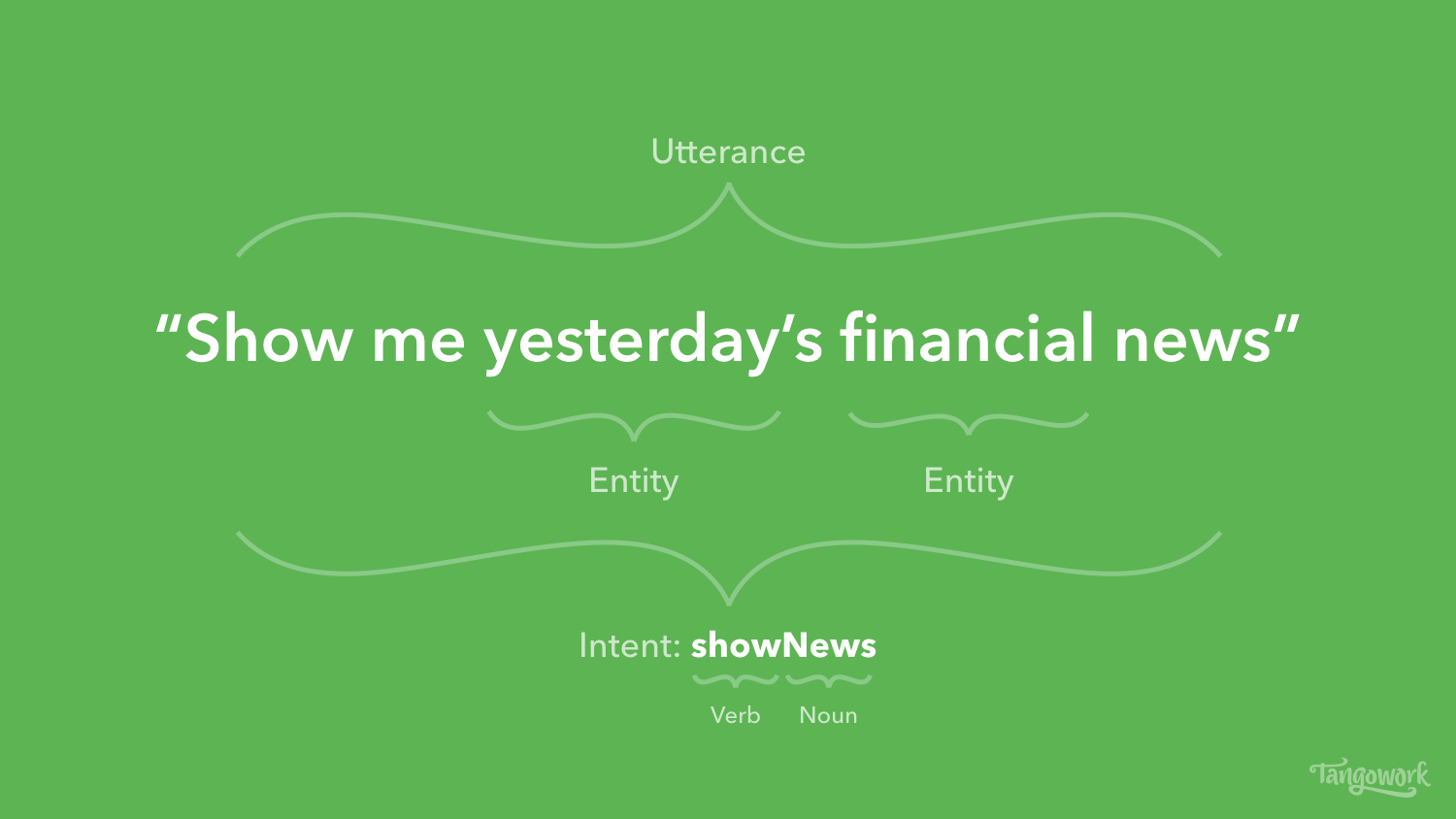

First, it is essential to be able to distinguish between intent and entity.

The intent is the user's goal: what they have in mind while typing a question into the chatbot, and what they really want to find. The entity is the attribute, that is, how the user describes the problem.

In short, the intent is what the user is looking for and the entity is how they are looking for it. Understanding this difference lets you plan the chatbot's training correctly.
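To make the distinction concrete, here is a minimal Python sketch of how a chatbot might represent one understood message. The `ParsedMessage` type, the intent names (`transfer_duration`, `request_statement`) and the keyword rules are all hypothetical; real NLU engines use trained models rather than keyword matching.

```python
from dataclasses import dataclass, field


@dataclass
class ParsedMessage:
    """Hypothetical result of understanding one user message."""
    intent: str                                   # what the user wants
    entities: dict = field(default_factory=dict)  # details describing it


def parse(message: str) -> ParsedMessage:
    """Toy keyword-based parser, for illustration only."""
    text = message.lower()
    entities = {}
    # Entity: which kind of transfer the user is talking about.
    if "international" in text:
        entities["transfer_type"] = "international"
    elif "domestic" in text:
        entities["transfer_type"] = "domestic"
    # Intent: the goal behind the message.
    if "transfer" in text and "how long" in text:
        intent = "transfer_duration"
    elif "statement" in text:
        intent = "request_statement"
    else:
        intent = "unknown"
    return ParsedMessage(intent, entities)


print(parse("How long does an international transfer take?"))
# → ParsedMessage(intent='transfer_duration', entities={'transfer_type': 'international'})
```

The same intent can arrive with different entities ("international" or "domestic" transfer), so training and test data need to cover both dimensions.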


The chatbot must not only remember the conversation and the sequence of linked questions, but also be flexible enough to jump from one question to an unrelated one.
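One way to picture the balance between memory and flexibility is a small conversation state that keeps the current intent and entities between turns. This is a hypothetical sketch, not how any particular chatbot platform stores context; it assumes each user turn has already been parsed into an intent (or `None` for a pure follow-up) plus a dictionary of entities.

```python
from typing import Dict, Optional, Tuple


class Conversation:
    """Hypothetical dialogue state: remembers the current intent and entities."""

    def __init__(self) -> None:
        self.intent: Optional[str] = None
        self.entities: Dict[str, str] = {}

    def update(self, intent: Optional[str],
               entities: Dict[str, str]) -> Tuple[Optional[str], Dict[str, str]]:
        """Apply one parsed user turn and return the resulting (intent, entities)."""
        if intent is None:
            # Follow-up with no new goal (e.g. "And a domestic one?"):
            # keep the previous intent and merge in the new details.
            self.entities.update(entities)
        else:
            # A new, possibly unrelated question: switch topic and drop
            # the details that belonged to the previous one.
            self.intent = intent
            self.entities = dict(entities)
        return self.intent, dict(self.entities)


conv = Conversation()
print(conv.update("transfer_duration", {"transfer_type": "international"}))
# → ('transfer_duration', {'transfer_type': 'international'})
print(conv.update(None, {"transfer_type": "domestic"}))
# → ('transfer_duration', {'transfer_type': 'domestic'})
print(conv.update("request_statement", {}))
# → ('request_statement', {})
```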

In addition, it is important that the chatbot knows how to end a conversation.

According to a study by Jain, Kumar, et al. (2018), users expect an interaction similar to the one they would have with a human, including an introduction and a conclusion. Chatbots, however, tend to be less prepared to end a conversation. Chaves and Gerosa (2018) pointed out that the chatbot needs to know when the conversation is over, for example by recognizing explicit statements from the user such as "thanks" or "bye".
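A minimal way to detect explicit closing statements like these is a set of regular expressions. The patterns and the closing reply below are assumptions for illustration; a production bot would more likely treat "goodbye" as one more intent learned from training data.

```python
import re
from typing import Optional

# Hypothetical closing phrases; \b keeps "thanks" from matching "thanksgiving".
CLOSING_PATTERNS = [r"\bthanks?\b", r"\bthank you\b", r"\bbye\b", r"\bgoodbye\b"]


def is_conversation_end(message: str) -> bool:
    """Return True if the message looks like an explicit closing statement."""
    text = message.lower()
    return any(re.search(pattern, text) for pattern in CLOSING_PATTERNS)


def reply_to_closing(message: str) -> Optional[str]:
    """Close politely, or return None so the normal flow can answer."""
    if is_conversation_end(message):
        return "You're welcome! Have a nice day."
    return None


print(is_conversation_end("Thanks!"))                   # → True
print(is_conversation_end("How do I transfer money?"))  # → False
```

A rule this simple would also treat "Thanks, but I have another question" as the end of the conversation, which is exactly the kind of case a test should catch.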

It is unavoidable: sooner or later, the bot won't know how to answer. For this reason, it is crucial to have a strategy for those cases, such as a fallback message or a handoff to a human agent.
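A common fallback strategy is to answer only when the bot is confident enough and otherwise offer a way out, such as a handoff to a human agent. The sketch below assumes a classifier that returns an intent together with a confidence score between 0 and 1; the threshold of 0.6, the `stub_classify` function and the messages are hypothetical placeholders.

```python
from typing import Callable, Dict, Tuple

# Assumed threshold; in practice it is tuned on real conversation data.
FALLBACK_THRESHOLD = 0.6
FALLBACK_MESSAGE = ("Sorry, I didn't understand that. "
                    "Would you like to talk to one of our agents?")


def answer(message: str,
           classify: Callable[[str], Tuple[str, float]],
           answers: Dict[str, str]) -> str:
    """Return the prepared answer, or the fallback when the bot is unsure."""
    intent, confidence = classify(message)
    # Low confidence, or an intent with no prepared answer: don't guess.
    if confidence < FALLBACK_THRESHOLD or intent not in answers:
        return FALLBACK_MESSAGE
    return answers[intent]


def stub_classify(message: str) -> Tuple[str, float]:
    """Hypothetical classifier: only confident about balance questions."""
    if "balance" in message.lower():
        return "check_balance", 0.9
    return "check_balance", 0.2


ANSWERS = {"check_balance": "You can see your balance in the Accounts tab."}

print(answer("What's my balance?", stub_classify, ANSWERS))
# → You can see your balance in the Accounts tab.
print(answer("Who won the match?", stub_classify, ANSWERS))
# → Sorry, I didn't understand that. Would you like to talk to one of our agents?
```

Keeping the threshold in a single constant makes it easy to tune once real conversations show where the bot tends to be wrong.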

How to test a chatbot before the release

There are three methods to test a chatbot before release: the general test, the product/service-specific test and the limits test. Ideally, you should complete all three before release, because they show the status of the key points and help you identify the underlying issues. Testing should not stop after release either: planning new tests (including automated ones) is just as important to make sure that new versions do not break existing features.

General test

A general test shows you how far along the chatbot is and whether it works correctly. If the chatbot does not respond correctly to the general test, there is no point in continuing with further tests until the existing problems are fixed.

In this phase, you ask general questions, or even just greetings (which are not really questions but a preliminary step), and check whether the chatbot welcomes the user. After all, a good bot's role is not only to answer questions but also to keep the conversation flowing pleasantly. If a released chatbot fails this step, users will probably stop trying or, in the worst case, leave the website. If your chatbot has already been released without a general test, check your analytics to see whether users are leaving after the first two exchanges.
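The general test lends itself well to automation, so it can be rerun on every new version. Here is a minimal sketch that sends a few greetings and lists those that received the fallback answer instead of a welcome. `bot_reply` is a hypothetical stand-in for a call to your real chatbot, and the greetings are examples only.

```python
FALLBACK = "Sorry, I didn't understand that."

# Greetings the bot should welcome, with varied case and punctuation.
GREETINGS = ["Hi", "hello!", "Hey", "Good morning."]


def bot_reply(message: str) -> str:
    """Toy bot under test (hypothetical); replace with a call to your chatbot."""
    if message.lower().strip(" !.") in ("hi", "hello", "hey", "good morning"):
        return "Hello! How can I help you today?"
    return FALLBACK


def run_general_test(reply) -> list:
    """Return the greetings that got the fallback instead of a welcome."""
    return [greeting for greeting in GREETINGS if reply(greeting) == FALLBACK]


print(run_general_test(bot_reply))  # → [] (every greeting was welcomed)
```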

Product/service-specific test

After the greetings, you get into the conversation proper. The second step covers the specific product or service the chatbot answers questions about. While the general test uses generic questions or even just greetings, here you move into the product's or service's own vocabulary. If your product happens to be a bank's website, the questions will surely cover transfers, balances and bank statements, for example "How long does an international transfer take?" or "I need a bank statement".
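For this test, it helps to write several phrasings for each intent, since real users rarely word a question the way the team does. The test cases and the toy `classify` function below are hypothetical, based on the bank example; in practice `classify` would call the chatbot under test.

```python
# Hypothetical test cases for a bank chatbot: several phrasings per intent.
CASES = {
    "transfer_duration": [
        "How long does an international transfer take?",
        "When will my transfer arrive?",
        "How many days until my money reaches Spain?",
    ],
    "request_statement": [
        "I need a bank statement",
        "Can you send me my statement for March?",
    ],
    "check_balance": [
        "What's my balance?",
        "How much money is in my account?",
    ],
}


def classify(message: str) -> str:
    """Toy keyword classifier standing in for the real bot's NLU."""
    text = message.lower()
    if "transfer" in text:
        return "transfer_duration"
    if "statement" in text:
        return "request_statement"
    if "balance" in text or "how much money" in text:
        return "check_balance"
    return "fallback"


def run_product_test(classify_fn) -> list:
    """Return (message, expected, got) for every misclassified phrasing."""
    failures = []
    for expected, phrasings in CASES.items():
        for message in phrasings:
            got = classify_fn(message)
            if got != expected:
                failures.append((message, expected, got))
    return failures


print(run_product_test(classify))
# → [('How many days until my money reaches Spain?', 'transfer_duration', 'fallback')]
```

Here the toy classifier handles the team's own wording but misses a paraphrase that never mentions the word "transfer", which is the kind of gap this test is meant to surface.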

Testing the chatbot's limits

If the first two tests go well, you can say that your chatbot answers the most common and significant expressions correctly. Next, you need to test what happens when the user writes something unrelated to the product or service, to see whether the chatbot can handle the conversation or fails.
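The limits test can be automated too: feed the bot off-topic, nonsensical or empty input and check that it falls back gracefully instead of crashing or giving an unrelated answer. The inputs, the fallback text and the toy `bot_reply` below are hypothetical.

```python
FALLBACK = ("Sorry, I can only help with banking questions. "
            "Would you like to talk to an agent?")

# Off-topic, nonsensical or empty inputs a real user might send.
OUT_OF_SCOPE = [
    "What's the weather like tomorrow?",
    "Tell me a joke",
    "Is the balance beam an Olympic sport?",
    "asdfgh",
    "",
]


def bot_reply(message: str) -> str:
    """Toy bot under test (hypothetical); replace with a call to your chatbot."""
    if "balance" in message.lower():
        return "You can see your balance in the Accounts tab."
    return FALLBACK


def run_limits_test(reply) -> list:
    """Return (input, problem) pairs for inputs not handled by the fallback."""
    failures = []
    for message in OUT_OF_SCOPE:
        try:
            answer = reply(message)
        except Exception as exc:  # a crash counts as a failure too
            failures.append((message, f"error: {exc}"))
            continue
        if answer != FALLBACK:
            failures.append((message, answer))
    return failures


print(run_limits_test(bot_reply))
# → [('Is the balance beam an Olympic sport?', 'You can see your balance in the Accounts tab.')]
```

The toy bot matches on the keyword "balance" and therefore answers a question about gymnastics as if it were about a bank account: a false positive that only out-of-scope inputs reveal.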

How well do these tests work?

Your team could face two problems while planning or running these tests:

- Developer confirmation bias, usually just called confirmation bias. "Confirmation bias is the tendency to search for, interpret, favor, and recall information in a way that confirms or supports one's prior beliefs or values. People display this bias when they select information that supports their views, ignoring contrary information, or when they interpret ambiguous evidence as supporting their existing attitudes" (source: Wikipedia definition of Confirmation bias). In chatbot testing, this means developers tend to test the phrasings they already expect the bot to understand.

- Lack of time for these activities. We are all overwhelmed by the number of tasks we need to carry out, and developers are no exception. Chatbot testing takes a long time, and there isn't always enough time to do it properly. Skipping it, however, can lead to releasing a bot that is not ready for its end users.

The solution for the chatbot pre-release test

If the internal team is in charge of the process, testing the chatbot can take a very long time, because the team has to fit testing tasks in alongside developing and fixing the product. And that's not the only issue. When the developer and the tester are the same person, bias tends to become a problem. Also, developers don't always resemble real users (especially if those users live in another country or belong to a less tech-savvy generation).

Luckily, there is an extremely useful tool that helps developers overcome these problems: crowdtesting. Once your marketing team has defined your target buyer persona and given us an overview of the chatbot's goal, we'll do the rest. UNGUESS's Quality Leader and Customer Success Manager will select testers who match your real users and carry out the chatbot testing and training. Within a couple of days, your chatbot will be tested by your own target users before its market release.